HKJ


[size=+3]Charging LiFePO4 batteries, algorithms[/size]

Chargers use slightly different methods to charge LiFePO4 batteries. Does this change how much energy is stuffed into the battery?

I am working on a computer-controlled charger for testing, and for one of the tests I decided to check this.

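[size=+2]The methods[/size]

- CC/CV charging with 3.4 to 3.8 volt as target and a very low termination current.

- CC/CV charging with 3.6 volt as target and a high termination current.

- Voltage charge, i.e. checking the battery voltage with the current off.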

This is easy to test when you have a charger where these parameters can be adjusted.
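For readers without such a charger, the effect of the two CC/CV parameters can be explored with a rough Python sketch. This is not the software of the charger used for these tests. The cell model is an invented toy: the `ocv` curve, the 50 mOhm resistance, the 1150 mAh capacity and the 1000 mA charge current are all assumptions, so only the general behaviour is meaningful, not the numbers.

```python
def ocv(soc):
    """Toy open-circuit voltage in volts for a state of charge 0..1.

    Invented curve, NOT measured data: a flat plateau with a steep
    rise close to full charge.
    """
    return 3.30 + 0.03 * soc + 0.60 * soc ** 60


def simulate_cccv(target_v, term_ma, charge_ma=1000.0,
                  capacity_mah=1150.0, r_ohm=0.05, dt_s=1.0):
    """Simulate one CC/CV charge, return (charged_mah, seconds).

    All default values are assumptions made for this illustration.
    """
    charged_mah = 0.0
    seconds = 0.0
    while True:
        soc = charged_mah / capacity_mah
        # Current that would put exactly target_v on the cell terminals.
        cv_limit_ma = (target_v - ocv(soc)) / r_ohm * 1000.0
        # CC phase while the limit is above charge_ma, CV phase afterwards.
        current_ma = min(charge_ma, cv_limit_ma)
        if current_ma <= term_ma:
            return charged_mah, seconds
        charged_mah += current_ma * dt_s / 3600.0
        seconds += dt_s


for target_v, term_ma in [(3.4, 10.0), (3.6, 10.0), (3.8, 10.0), (3.6, 200.0)]:
    mah, secs = simulate_cccv(target_v, term_ma)
    print(f"{target_v:.1f} V, TC {term_ma:.0f} mA: {mah:.0f} mAh in {secs / 60:.0f} min")
```

Changing `target_v` and `term_ma` mimics adjusting the charge voltage and the termination current on the charger.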

[size=+2]The tests[/size]

All tests are done as a charge followed by a discharge, with the capacity measured during both operations.
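Measuring capacity during a charge or discharge usually comes down to integrating the current over time. A minimal sketch of that idea, assuming the log is a list of timestamps in seconds and currents in mA (the function name and the data layout are made up for this example, they are not taken from the test equipment):

```python
def capacity_mah(times_s, currents_ma):
    """Integrate current over time (trapezoidal rule) and return mAh."""
    if len(times_s) != len(currents_ma):
        raise ValueError("times_s and currents_ma must have the same length")
    total_ma_s = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        # Average of two neighbouring samples times the interval length.
        total_ma_s += 0.5 * (currents_ma[i] + currents_ma[i - 1]) * dt
    return total_ma_s / 3600.0  # mA*s -> mAh


# Hypothetical log: a constant 1000 mA for one hour.
print(capacity_mah([0, 1800, 3600], [1000, 1000, 1000]))
```

The same function works for the charge and the discharge log, as long as the current is logged as a positive value in both cases.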

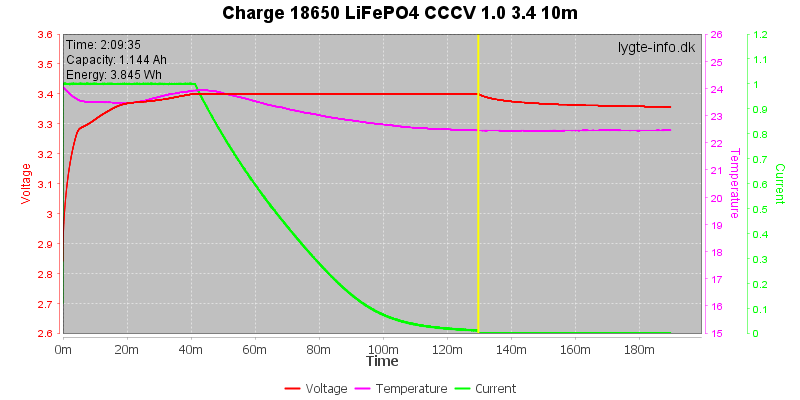

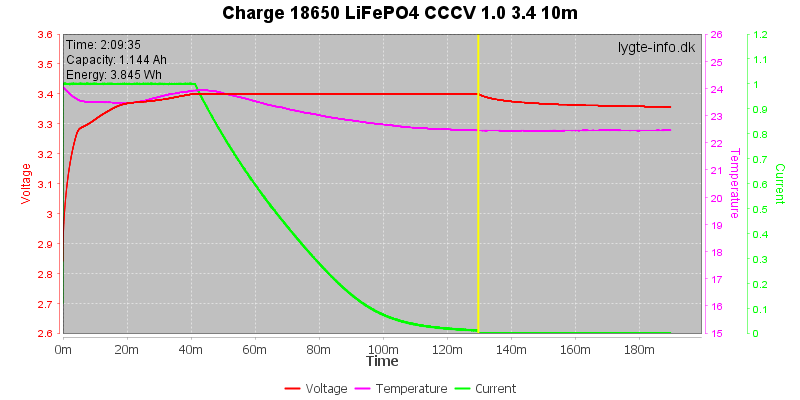

The first test charges to 3.4V, which is only slightly above the voltage of a full cell.

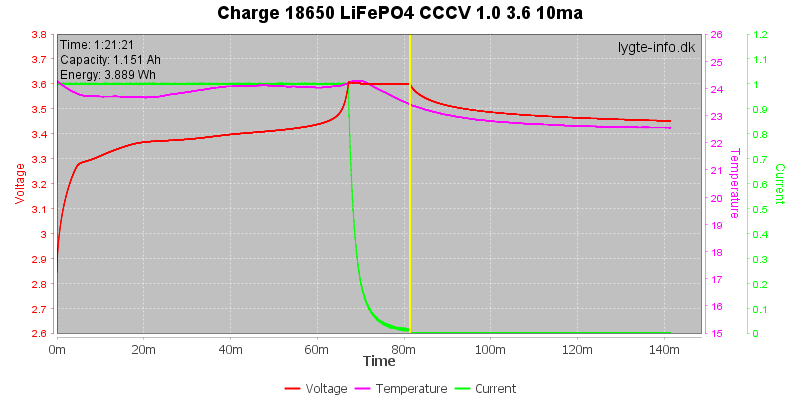

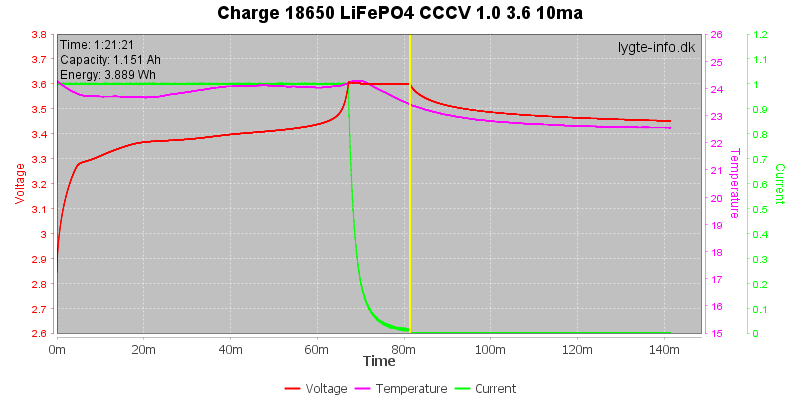

The usual voltage for charging LiFePO4 is 3.6V or 3.65V.

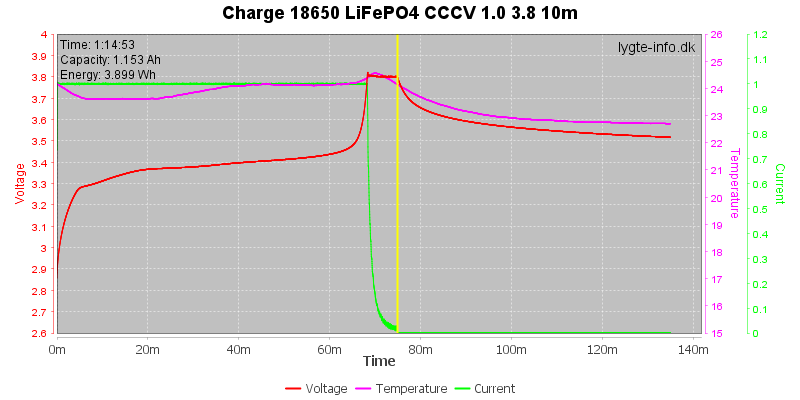

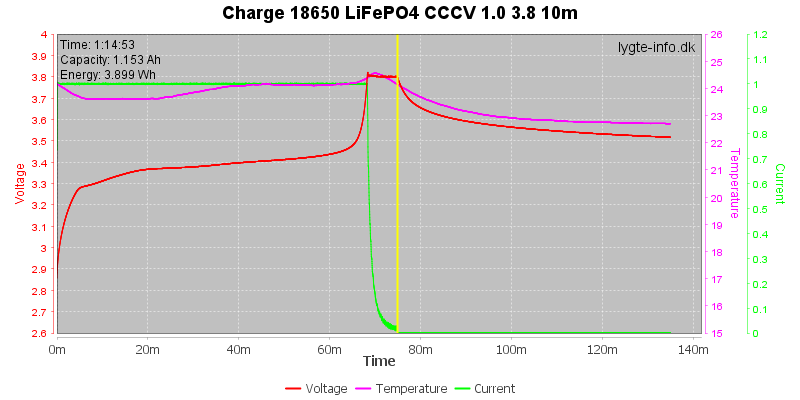

A 3.8V charging voltage is rather high, but it does not stuff more capacity into the cell. A slight temperature increase is present when the voltage goes high.

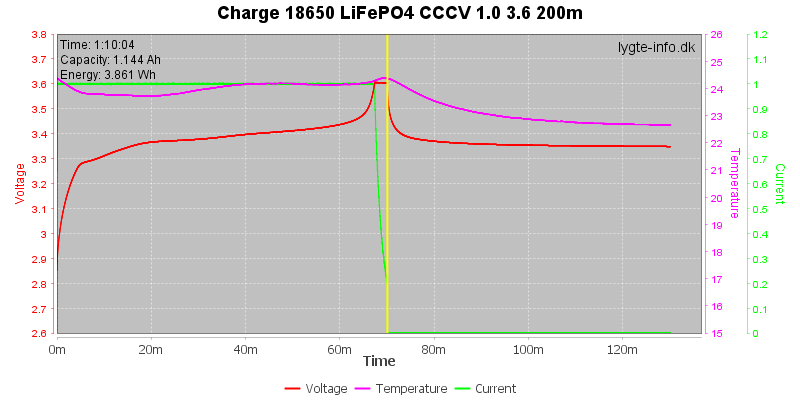

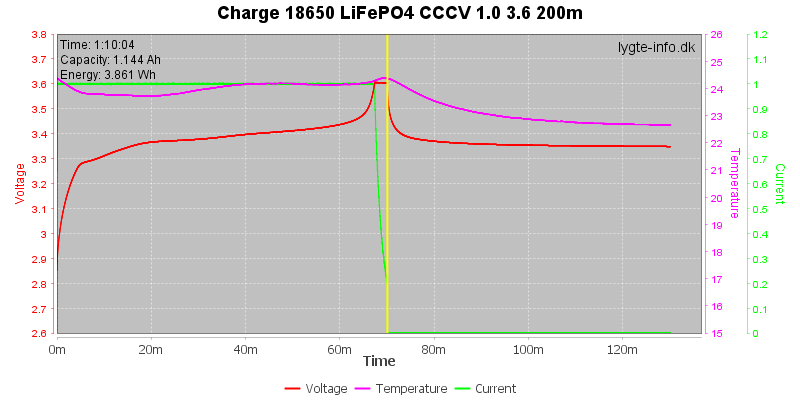

Using a faster termination, i.e. a higher termination current.

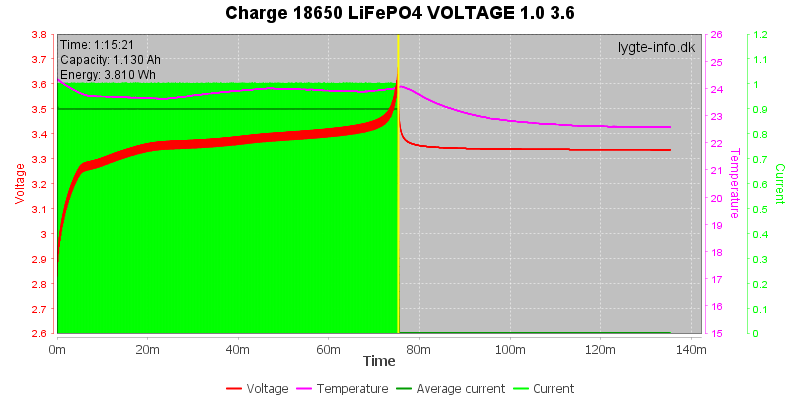

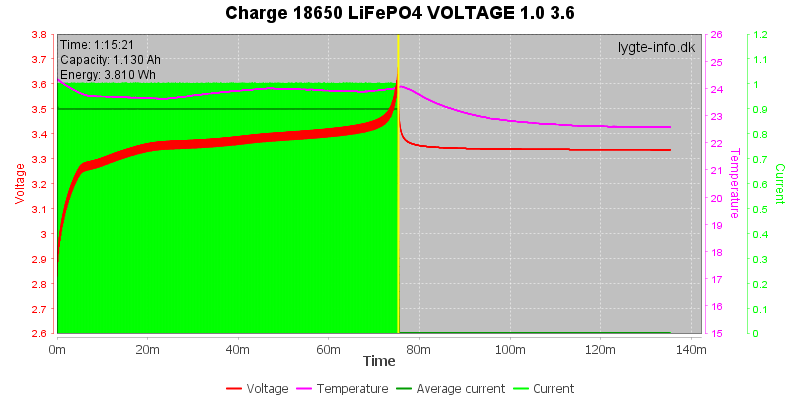

Using a pulsing current and measuring the voltage while the current is off. This is a fast charge method, but as can be seen above it is not faster than the other methods.
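The pulsing method can be sketched in a few lines of Python. The exact termination rule is not described here, so the sketch assumes the charge stops as soon as the current-off voltage reaches the target. `ToyCell`, its linear voltage curve and the 1 mAh pulse size are invented for illustration only.

```python
def pulse_charge(rest_voltage, apply_pulse, target_v=3.6, max_pulses=100000):
    """Charge in pulses until the current-off voltage reaches target_v.

    rest_voltage: callable returning the cell voltage (V) with current off.
    apply_pulse:  callable that delivers one pulse and returns the mAh added.
    Returns the total mAh delivered.
    """
    charged_mah = 0.0
    for _ in range(max_pulses):
        if rest_voltage() >= target_v:  # assumed termination rule
            break
        charged_mah += apply_pulse()
    return charged_mah


class ToyCell:
    """Invented stand-in for real hardware, NOT a LiFePO4 model."""

    def __init__(self):
        self.charged_mah = 0.0

    def rest_voltage(self):
        # Linear toy curve: 3.0 V empty, +1 mV per mAh charged.
        return 3.0 + 0.001 * self.charged_mah

    def apply_pulse(self):
        self.charged_mah += 1.0  # each pulse adds 1 mAh
        return 1.0


cell = ToyCell()
print(pulse_charge(cell.rest_voltage, cell.apply_pulse, target_v=3.6))
```

On real hardware the two callables would wrap the charger's own measurement and current control, and the pause before each voltage reading would need to be chosen.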

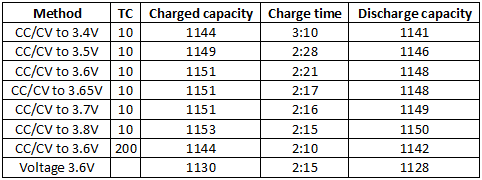

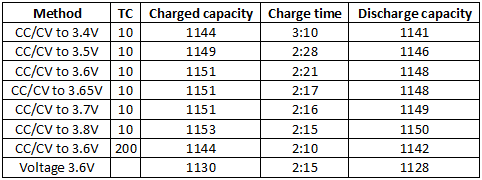

Capacity is in mAh; TC is the termination current in mA.

The capacity difference between the charge voltages is very small, but using a low voltage (3.4V) means slower charging. The higher termination current does not have much influence on the charge time, but may affect capacity.

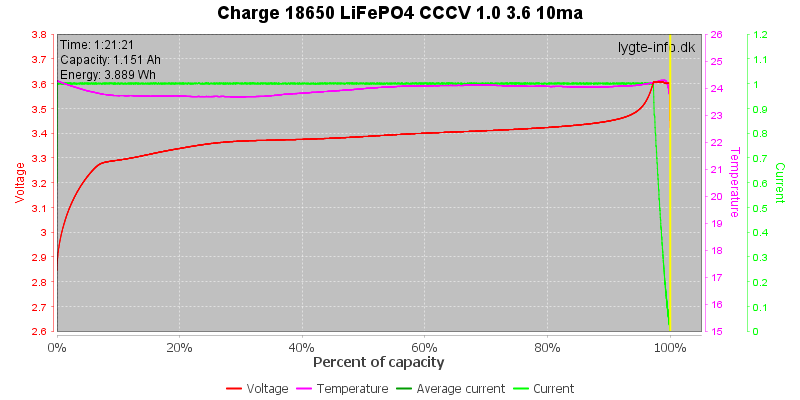

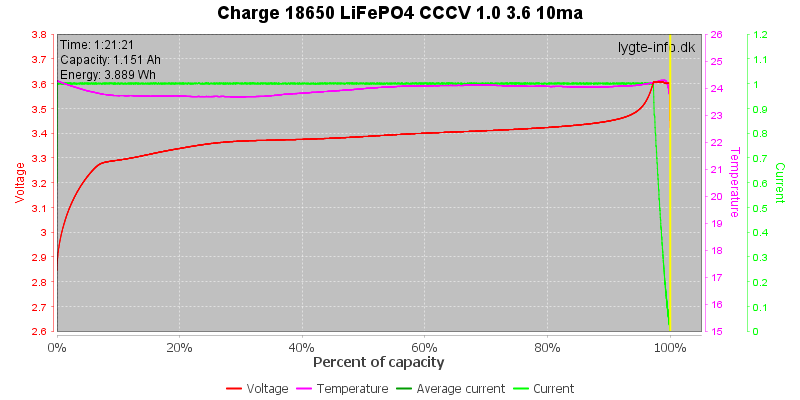

How much can a faster termination affect the end result? The current starts dropping at 97.2% or 1119mAh charged. This means that even a charge without any CV (constant voltage) phase will be within about 3% of full capacity.
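The arithmetic behind that statement, as a small Python check. It assumes the 97.2% is relative to the total capacity charged in that test; the function name is made up for this example.

```python
def cc_only_shortfall(charged_mah, fraction_of_full):
    """Return (full_mah, missing_mah, missing_pct) for a charge that
    stops where the CV phase would begin."""
    full_mah = charged_mah / fraction_of_full
    missing_mah = full_mah - charged_mah
    missing_pct = (1.0 - fraction_of_full) * 100.0
    return full_mah, missing_mah, missing_pct


full_mah, missing_mah, missing_pct = cc_only_shortfall(1119.0, 0.972)
print(f"full: {full_mah:.0f} mAh, missing: {missing_mah:.0f} mAh ({missing_pct:.1f} %)")
```

With the figures above this gives a full charge of about 1151mAh, so skipping the CV phase leaves out roughly 32mAh, or 2.8%.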

[size=+3]Conclusion[/size]

This is just a simple and fast test, but it shows that the different charge methods only have a small influence on the final charge of a LiFePO4 battery.


Hopefully I can learn from this thread.
