
How bright are high CRI LEDs?

- Thread starter Yavox


Help Support Candle Power Flashlight Forum


FloggedSynapse

Enlightened

Not as bright as the cool white ones. More light is lost or wasted in high CRI LEDs. Hopefully you won't lose more than 25-40% of your output (lumens) compared with a cool white LED.

Look at the first page here to see the efficiency of the high CRI LEDs (neutral, warm white) compared with the lower CRI LEDs (cool white):

http://www.cree.com/products/xlamp7090_xre.asp
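To put the 25-40% estimate in numbers, here is a minimal sketch that turns a cool white lumen figure into a rough range for a high CRI LED. It assumes the loss really falls in that 25-40% band, which is a forum rule of thumb rather than a datasheet value, and the 1000 lm cool white figure in the usage note is hypothetical.

```python
from typing import Tuple


def high_cri_range(cool_white_lumens: float,
                   min_loss: float = 0.25,
                   max_loss: float = 0.40) -> Tuple[float, float]:
    """Return (worst_case, best_case) lumens for a high CRI LED.

    The default 25-40% loss band is an assumption taken from the
    rule of thumb in this thread, not a manufacturer specification.
    """
    if not 0.0 <= min_loss <= max_loss <= 1.0:
        raise ValueError("need 0 <= min_loss <= max_loss <= 1")
    worst = cool_white_lumens * (1.0 - max_loss)  # largest loss
    best = cool_white_lumens * (1.0 - min_loss)   # smallest loss
    return worst, best


if __name__ == "__main__":
    low, high = high_cri_range(1000.0)  # hypothetical cool white output
    print(f"Expect roughly {low:.0f}-{high:.0f} lm from a high CRI equivalent")
```

With a hypothetical 1000 lm cool white emitter, that works out to roughly 600-750 lm.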


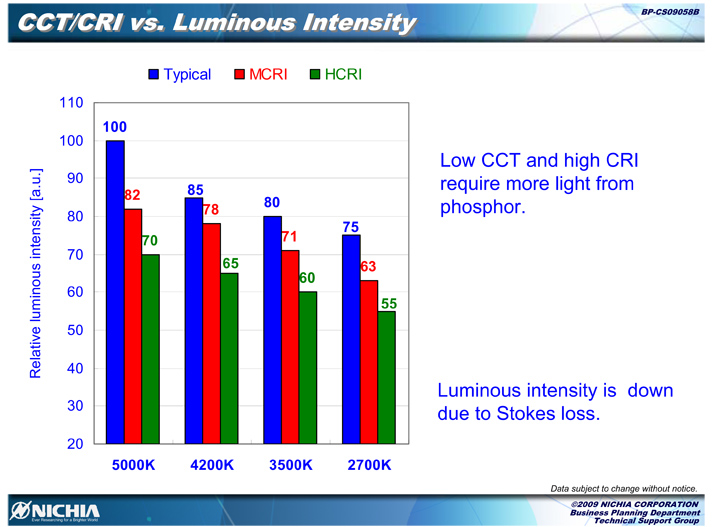

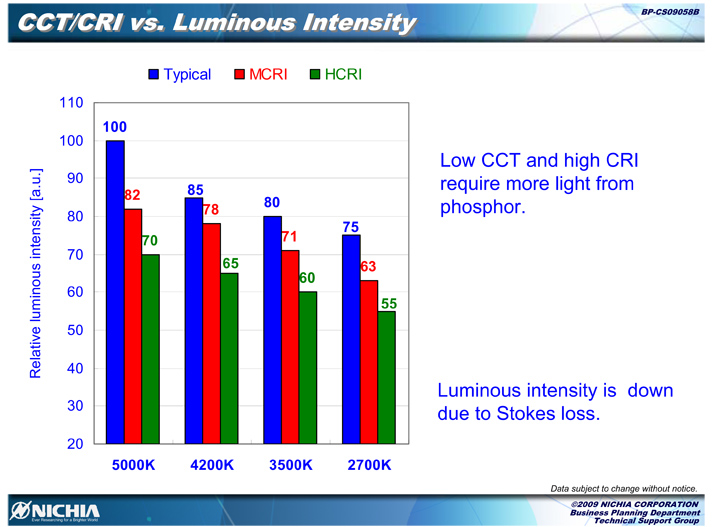

CRI is based on color temperature in the sense that it is measured relative to a black-body radiator of a specific color temperature. A warm or cool color temperature, however, tells you nothing about the CRI. The graph below, which I pulled from a Nichia presentation, shows the lumen cost Nichia experiences as a function of color temperature (a warmer CCT at the same CRI comes at the expense of lumens).

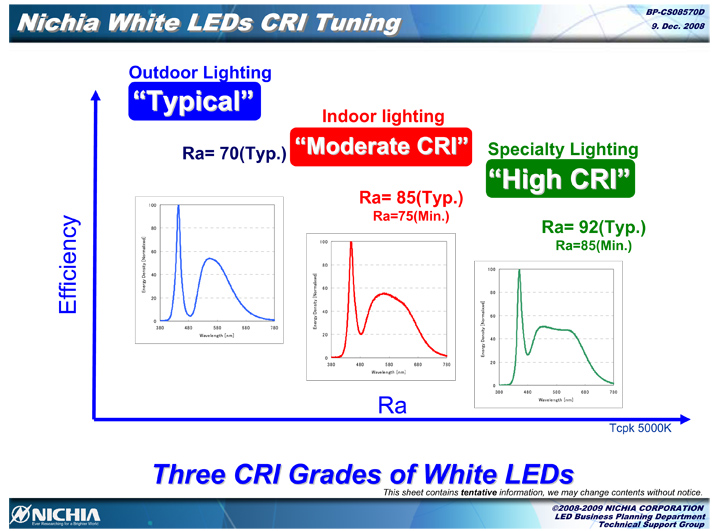

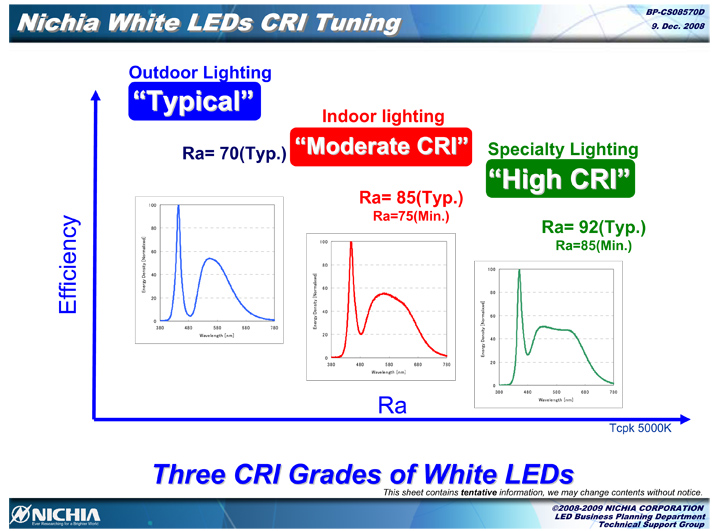

To make sense of the graph, I include the additional graph showing how Nichia has chosen to define "Typical", "Moderate" and "High" CRI:

So, for example, going from a typical LED at 5000 K CCT to a high CRI LED also at 5000 K would take the output from 100% down to 70%. If you compared the typical 5000 K LED to a warmer, high CRI LED at 4200 K, you would go from 100% down to 65%.
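The percentages above can be applied to any baseline output. This is a minimal sketch that assumes the three readings quoted in the post (100%, 70%, 65%) are the only known points; they are read by eye off the Nichia graph, so treat them as approximate. The 300 lm baseline in the usage code is hypothetical.

```python
# Relative lumen output read off the Nichia graph, as quoted in the post.
# A typical-CRI 5000 K LED is the 100% baseline. Values are approximate.
RELATIVE_OUTPUT = {
    ("typical", 5000): 1.00,
    ("high", 5000): 0.70,
    ("high", 4200): 0.65,
}


def expected_lumens(baseline_lumens: float, cri_class: str, cct_k: int) -> float:
    """Scale a typical-CRI 5000 K output by the graph factor for the
    requested CRI class and CCT. Only the quoted points are known, so
    no interpolation is attempted."""
    key = (cri_class, cct_k)
    if key not in RELATIVE_OUTPUT:
        raise KeyError(f"no reading for {cri_class} CRI at {cct_k} K")
    return baseline_lumens * RELATIVE_OUTPUT[key]


if __name__ == "__main__":
    baseline = 300.0  # hypothetical typical-CRI 5000 K output in lumens
    for cri_class, cct_k in RELATIVE_OUTPUT:
        lumens = expected_lumens(baseline, cri_class, cct_k)
        print(f"{cri_class:>7} CRI at {cct_k} K: {lumens:.0f} lm")
```

From a 300 lm baseline, the two high CRI cases come out at about 210 lm and 195 lm.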

I would guess that other LED manufacturers are working with similar phosphors and therefore the same physics Nichia outlines here. If that is the case, then for Cree LEDs I think you could use the blue or red bars as a guide to lumens based on relative CCT. :shrug:

To my knowledge, Cree does not bin based on CRI, nor does it address CRI to the extent that some other LED manufacturers currently do. I recently put a warm white Cree XP-G in my integrating sphere and measured 4480 K CCT, CRI = 79 and 150 lumens (out the front at ~660 mA). The tint was certainly warm, but calling this a high CRI light source would be misleading, IMHO. By the standards detailed above, I would consider that Cree sample a moderate CRI LED.

The OP asks specifically about high CRI LEDs. What is a high CRI LED, and which of the LEDs currently in use would qualify as such? :shrug:
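For reference, the CRI figures in this thread (79, 80, 83, 96) are the CIE general color rendering index Ra. Under CIE 13.3, each of eight test color samples gets a special index R_i = 100 - 4.6 * dE_i, where dE_i is how far that sample's color shifts between the LED and a reference illuminant, and Ra is the mean of R1 to R8. The reference is a Planckian (black-body) radiator below 5000 K and a CIE daylight illuminant at 5000 K and above. This sketch covers only that final arithmetic; computing each dE_i (spectral data, chromatic adaptation, CIE 1964 U*V*W* color space) is left out, and the color shifts in the usage code are made up, not measured.

```python
from typing import Sequence


def special_cri(delta_e: float) -> float:
    """Special color rendering index for one test sample (CIE 13.3)."""
    return 100.0 - 4.6 * delta_e


def general_cri(delta_es: Sequence[float]) -> float:
    """General CRI Ra: arithmetic mean of R1..R8."""
    if len(delta_es) != 8:
        raise ValueError("Ra is defined over exactly eight test color samples")
    return sum(special_cri(de) for de in delta_es) / 8


def reference_illuminant(cct_k: float) -> str:
    """Which reference source the LED is compared against at a given CCT."""
    return "Planckian radiator" if cct_k < 5000 else "CIE daylight"


if __name__ == "__main__":
    # Hypothetical color shifts for samples 1-8; not measured data.
    example_shifts = [3.0, 2.5, 4.0, 3.5, 2.0, 5.0, 4.5, 6.0]
    print(f"Ra = {general_cri(example_shifts):.1f}")
    print(f"Reference at 4480 K: {reference_illuminant(4480)}")
```

A source that shifted none of the samples would score Ra = 100; every unit of average color shift costs 4.6 points.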


How bright are high CRI LEDs? If I wanted somebody to make me a triple or quad P60-style drop-in with them, how many OTF lumens could I expect from a light powered by 2x18650?

Based on what McGizmo said, I have a moderately high CRI XP-E triple that puts out around 500 OTF lumens. The emitters are XP-E P4 7A at 3000 K color temperature, with a CRI around 80.

Check out my sigline.

That 500-lumen figure comes from the sales thread I bought it from; I did not measure it myself. Compared with an M60W MC-E, it seemed pretty close in output, but with a much warmer color temperature.
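One rough way to answer the triple-vs-quad question from the numbers above is to scale a known light by emitter count. This is a minimal sketch under loud assumptions: the 500 OTF lumen triple figure is the unverified sales-thread number, each emitter is assumed to be driven at the same current in both builds (the 2x18650 pack, driver and heat sinking may not allow that), and the optional relative_output factor, such as the 0.7 borrowed from the Nichia 5000 K comparison, is only illustrative since that comparison is typical vs. high CRI, not moderate vs. high.

```python
def scale_emitter_count(total_otf: float, emitters_now: int,
                        emitters_target: int,
                        relative_output: float = 1.0) -> float:
    """Estimate OTF lumens for a drop-in with a different emitter count.

    Assumes output scales linearly with emitter count (same drive current
    per emitter, same optic losses). relative_output applies an optional
    lumen penalty for a different, e.g. higher CRI, emitter.
    """
    if emitters_now <= 0 or emitters_target <= 0:
        raise ValueError("emitter counts must be positive")
    per_emitter = total_otf / emitters_now
    return per_emitter * emitters_target * relative_output


if __name__ == "__main__":
    # 500 OTF lumens from the XP-E triple (sales-thread figure, unverified).
    print(f"Quad, same emitters: {scale_emitter_count(500, 3, 4):.0f} lm")
    print(f"Quad, 70% output emitters: {scale_emitter_count(500, 3, 4, 0.7):.0f} lm")
```

Under those assumptions a quad of the same emitters would land near 667 OTF lumens, and about 467 if each emitter gave up 30% for higher CRI.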

Colorblinded

Enlightened

Based on the research I've done to date, it seems that finding a truly high CRI LED flashlight with good output (at least 200-250 OTF lumens, which I could really use) is challenging, if not impossible, short of a ridiculous budget.

Fortunately I haven't been looking for long, but I don't have my hopes up at the moment based on what I've found from most manufacturers.


Henk_Lu

Flashlight Enthusiast


What about an SST90/3000K high CRI? :wave:

jimmy1970

Flashlight Enthusiast

I've got a Malkoff W MC-E M60 whose tint colour, to the eye, is almost identical to my Sundrop 3S. The colour rendition is very similar but not exact.

It is hard to quantify CRI by eye when you also have to take the tint into account.

James....


hoongern

Enlightened


There are no SST90s with high CRI, unfortunately. The 3000 K versions only achieve a moderate CRI of 83. That is not great compared with some of Nichia's offerings, e.g. the Nichia 083/183, which go up to 96 CRI, and at CCTs closer to sunlight! (Hence the Sundrop.)
